JAYCOR Awarded Tender to Design & Build SANSA’s Micro-Data Centre

JAYCOR is very proud to be an integral part of the construction and deployment of the South African National Space Agency’s (SANSA) new Space Weather Centre in the Western Cape, due to be completed and launched later this year.

SANSA was formed in 2010, but South Africa’s involvement with space research and activities began much earlier, when it helped early international space efforts to observe Earth’s magnetic field at stations around the southern parts of Africa.

The research and work carried out at SANSA focuses on space science, engineering, and technology that can promote development, build human capital, and provide important national services. Much of this work involves monitoring the Sun, the Earth, and our surrounding environment, and uses the collected data to ensure that navigation, communication technology, and weather forecasting and warning services function as intended.

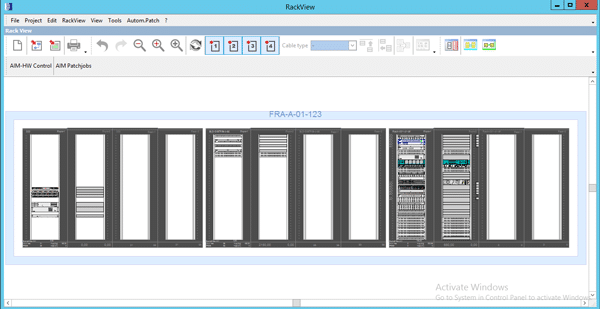

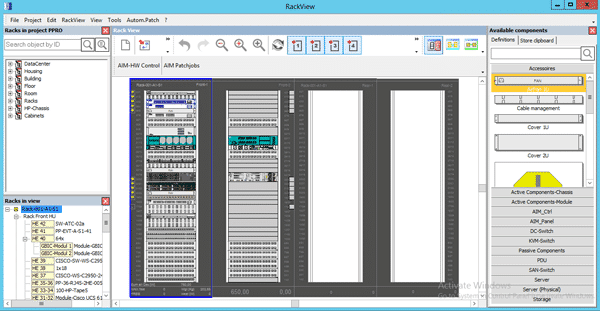

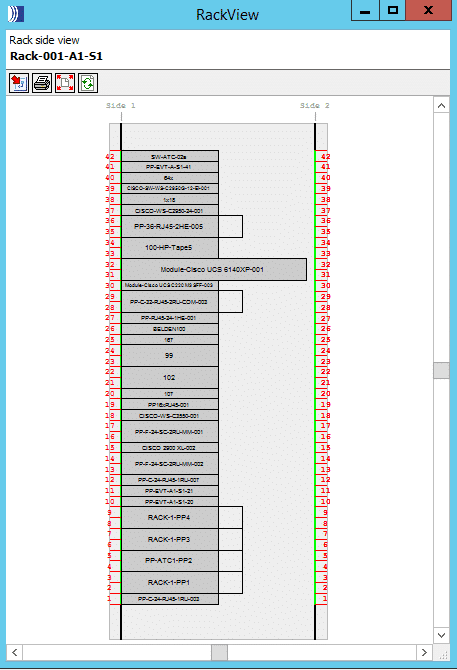

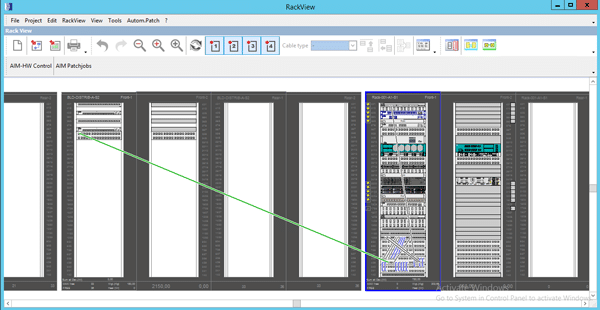

Earlier this year, JAYCOR was awarded the tender to design, supply, construct, and commission the Space Weather Centre’s micro-data centre, a key component of the hybrid cloud/on-prem solution SANSA requires to deploy the centre’s mission-critical services.

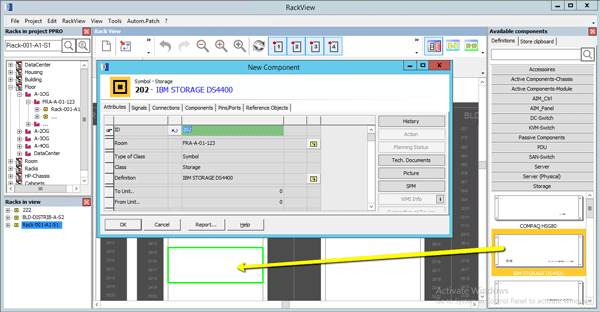

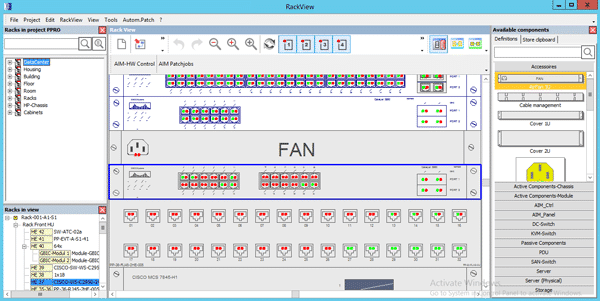

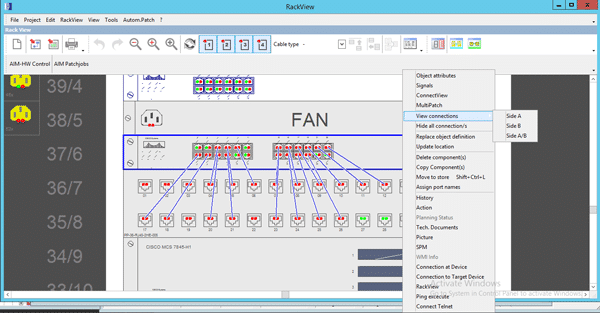

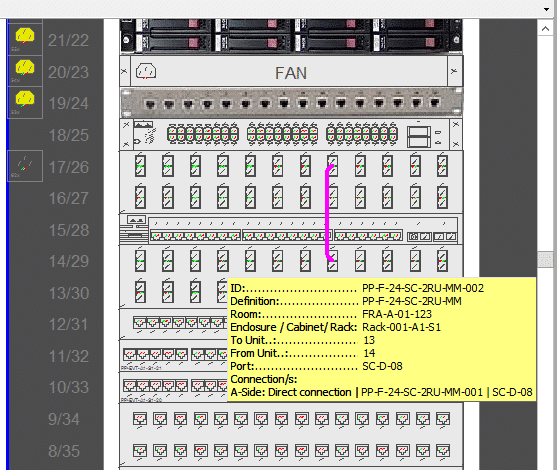

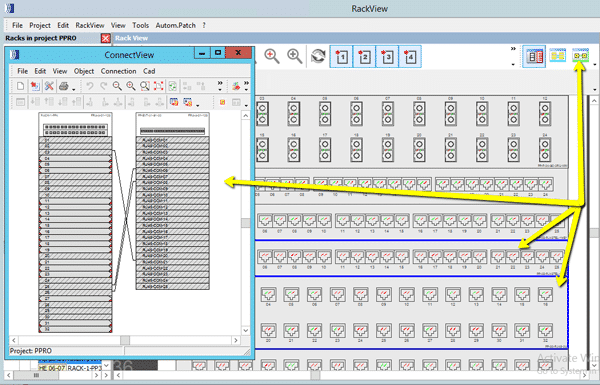

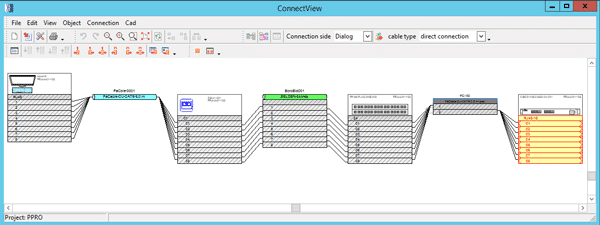

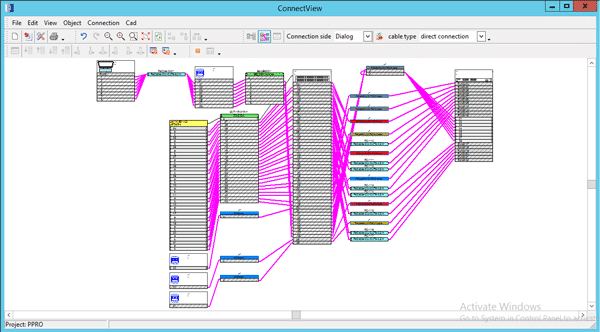

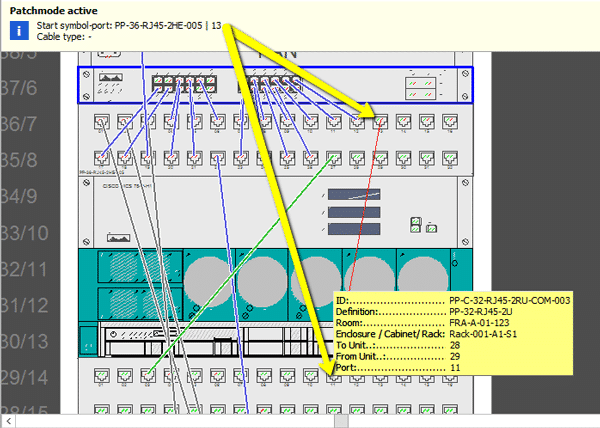

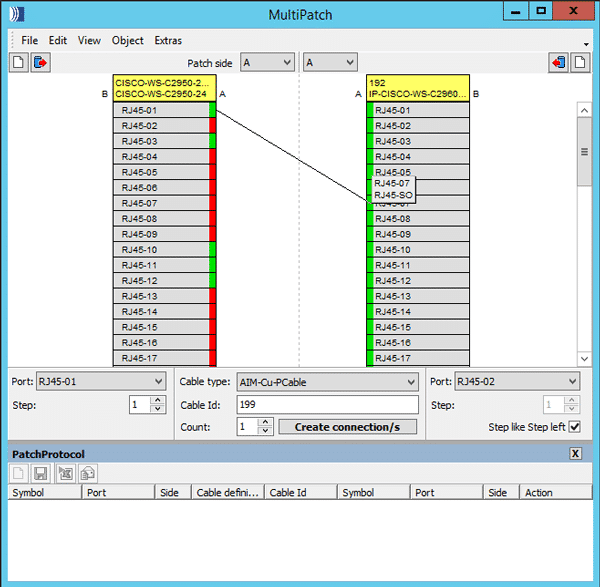

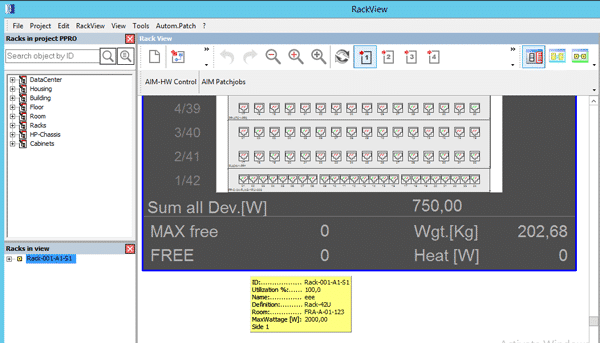

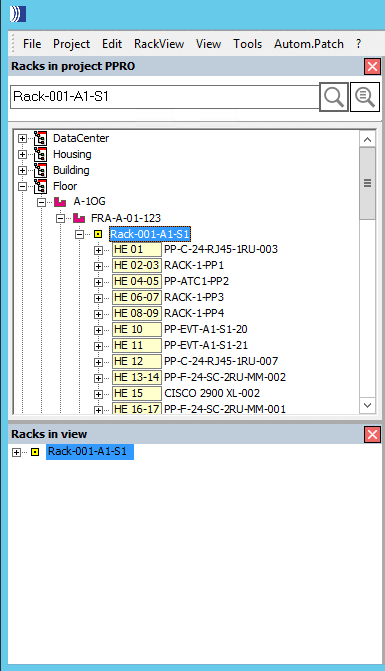

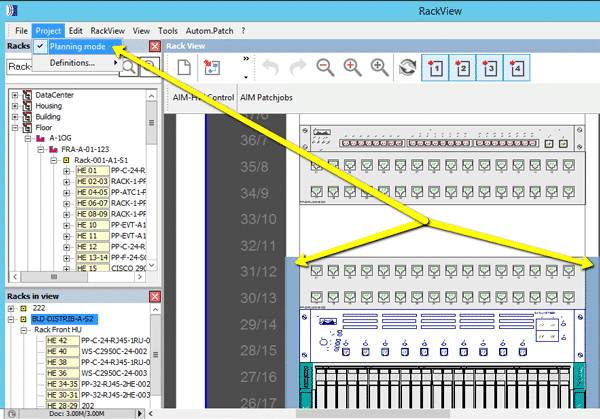

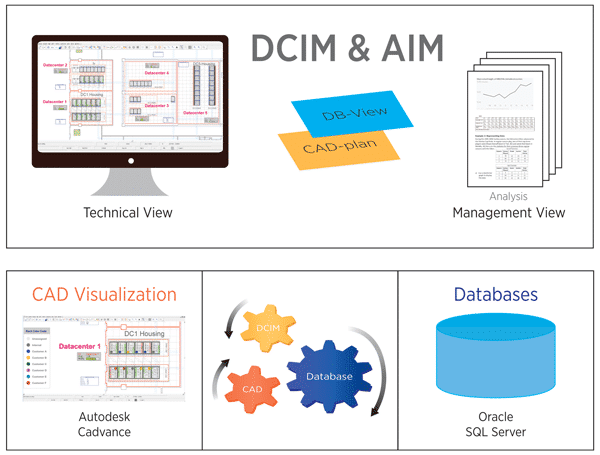

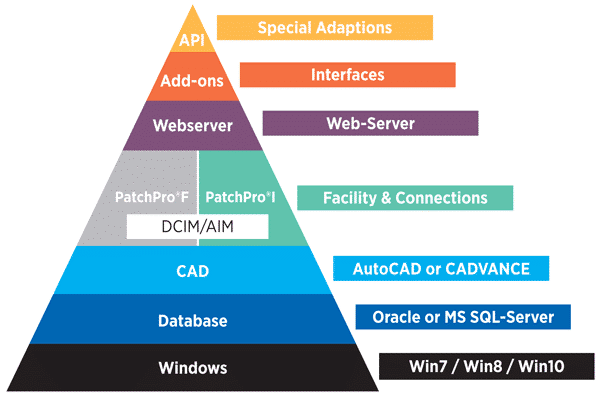

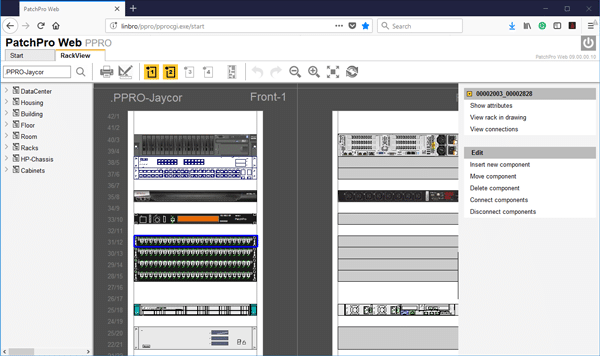

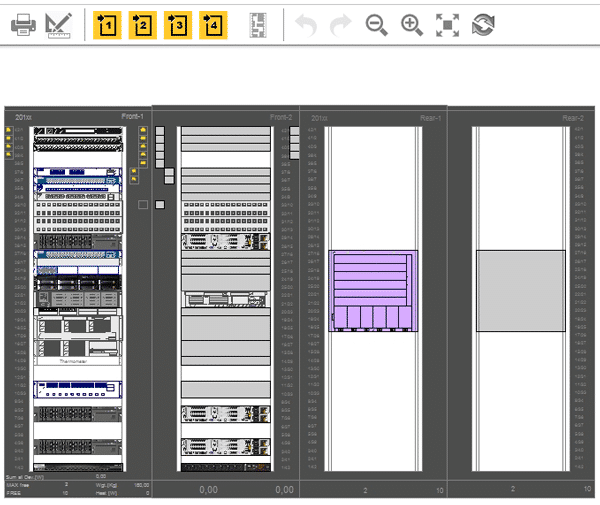

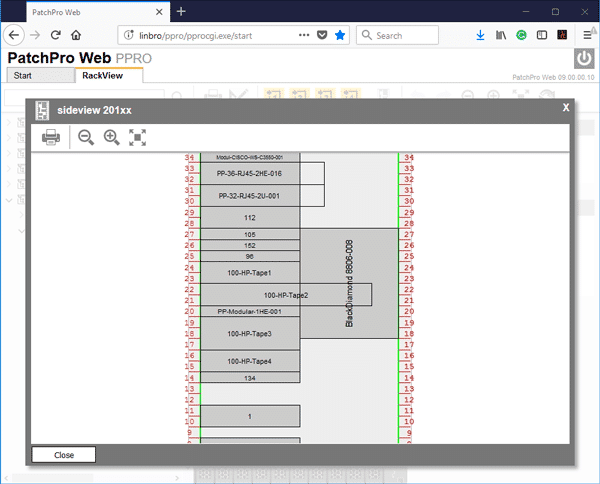

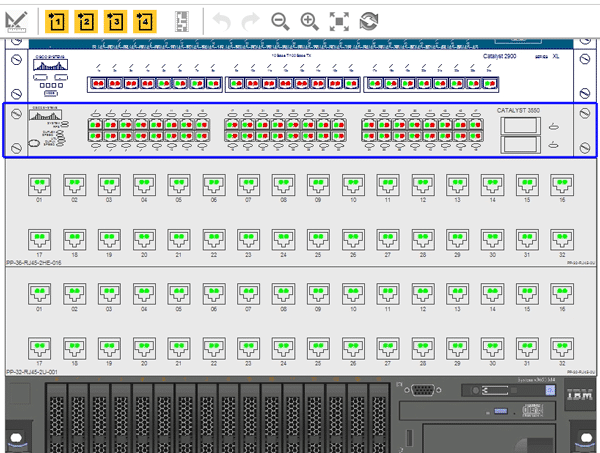

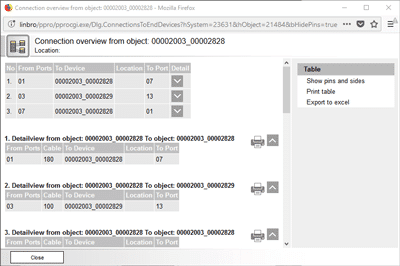

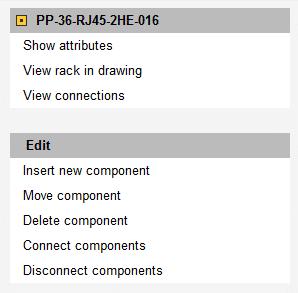

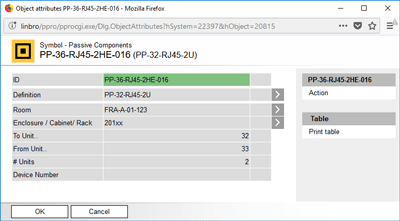

The scope of work for the 36 m² micro-data centre included all racks and related infrastructure, external and rack UPSs, PDUs, access control, cooling, environmental monitoring, and the fire-suppression systems, with all components to be managed through Data Center Infrastructure Management (DCIM) asset management software. JAYCOR’s expertise in connected infrastructure, together with the flexibility and agility to deliver a turnkey solution built on best-in-class OEM brands that meets the full scope of work, was a key factor in its selection as a partner on the project.

As we celebrate Space Exploration Day this week and edge closer to the completion of the project, we take this opportunity to commend and celebrate all the people, past and present, committed to the advancement of space science. We look forward to the future as we play a small role in the advancement of South Africa’s space agency and the sciences.

Greg Pokroy

CEO

JAYCOR International